In my experience, a new project is something software engineers yearn for – it’s an opportunity to start fresh and to right the wrongs of the past. Of course, you will make new mistakes and covet a blank slate again. Regardless, I think a new project is the best time to think seriously about your previous project. What feels easy, and why? What causes daily friction, and how could it be smoothed over? I have been working on a Python/Django web application for a few years and had just this opportunity when starting a new project. This post looks at some of the changes I decided to make and some of the things I intentionally kept the same.

What Changed:

Switch from Make to Task

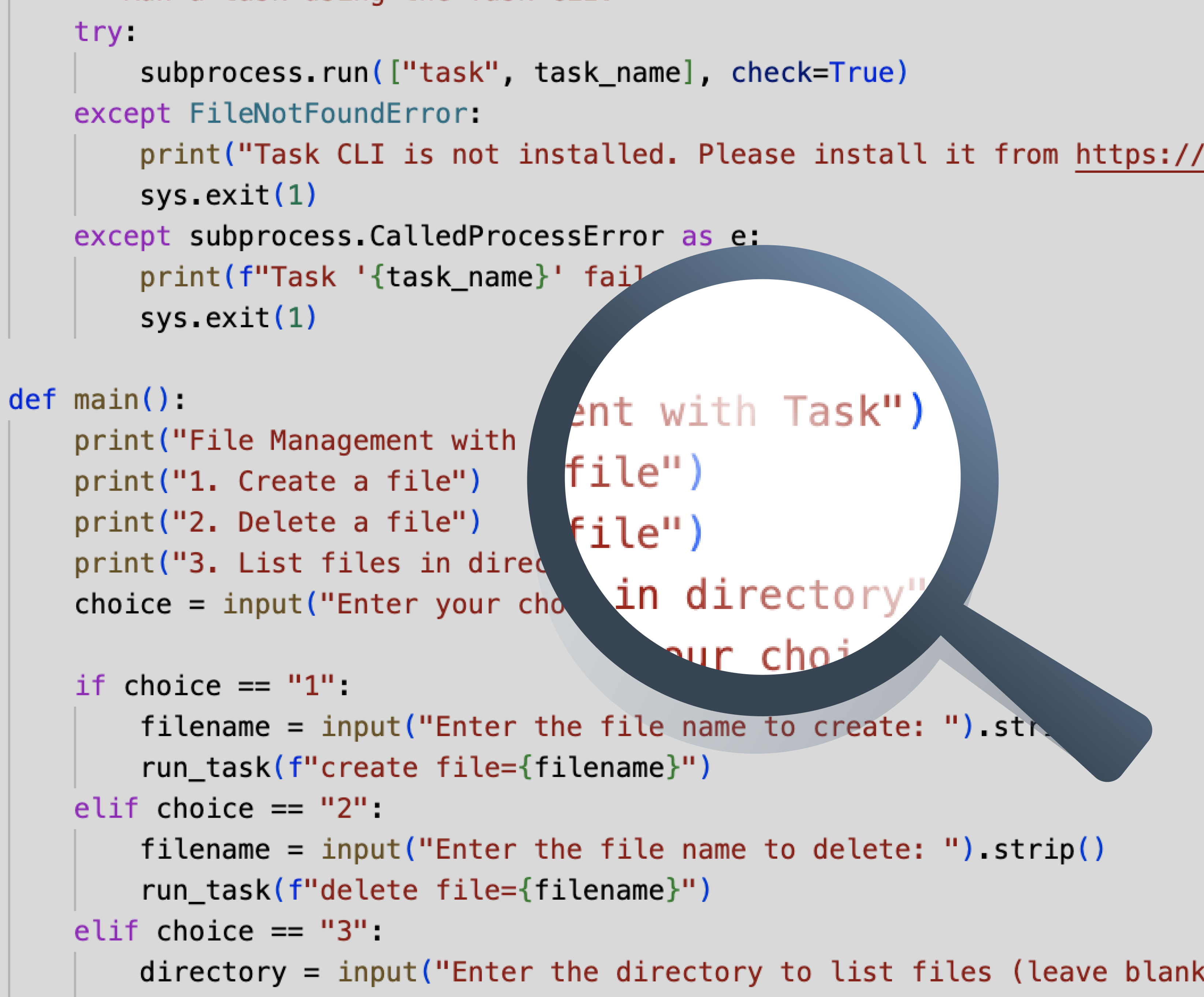

One of the minor technology updates for this new project was switching from Make to Task. Previously I had found the Makefile format to be less flexible and legible than I had wanted. I also found the make command itself less helpful than I felt it could be in the era of modern CLIs. I decided this would be a good opportunity to use a new task configuration and execution tool. After a bit of shopping around I found Task to be the best candidate and after a few months of usage I’ve been very happy with the switch.

The YAML configuration format of Task is much more legible, and the output of the task command is much more helpful. For example, Task can list the available tasks along with their descriptions (via task --list), whereas finding Make targets typically means using grep on the Makefile itself. Many additional options are also available, such as marking certain tasks to run silently or declaring other tasks as dependencies.

Additionally, Task allows defining multiple Taskfiles in sub-folders, as well as setting the working directory on a task-by-task basis. For example, if your project has separate frontend and backend folders, each can have its own Taskfile that defines its own working directory. Both can then be included in the root Taskfile, so the commands can be run from anywhere in the file structure while still keeping separate frontend and backend namespaces.

Task also supports global tasks, allowing me to replace my shell functions and aliases with task definitions that are consistent with my project-specific tasks.

I think it is important to note that I am not declaring Task better than Make. Make was created specifically to build executables for projects, not to declare a list of executable tasks. Task is just more purpose-built for my specific use case.

Strict usage of an abstract base model on all models

I also wanted to take the opportunity provided by a new project to enforce some additional consistency in all the application models by using an abstract base model to define shared fields for all models. In Django an abstract base model allows you to define fields that will be created on each inheriting model without creating a table itself, therefore ensuring all models will have these fields.

For example, the following base model defines created_date and updated_date fields for every model that inherits from it.

    from django.db import models


    class BaseModel(models.Model):
        class Meta:
            abstract = True

        created_date = models.DateTimeField(auto_now_add=True, db_index=True)
        updated_date = models.DateTimeField(auto_now=True, db_index=True)

Using this from the beginning will allow all models to have a historic created_date which can become vital for future features or investigative queries even if you didn’t originally think a model needed it.

Focus on test coverage

While good test coverage is a given for most developers, it can be difficult to maintain your team’s testing standards when developing connections to external services, request middleware, or other complex integrations. From the outset, I wanted to make sure that all of these more difficult to test pieces of code still had enough coverage to feel comfortable making changes in the future.

In order to do this, I leaned on features such as the patch decorator from Python’s unittest.mock module and the override_settings decorator from django.test. The patch decorator replaces a specific object with a mock for the duration of a test, without relying on dependency injection or any other change to your production code. For example, to test a service that performs some logic and then makes an external request, you could write the following to test the logic without hitting the external service:

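    from unittest.mock import patch

    @patch("requests.post")
    def my_test(self, requests_post_mock):
        # The patched requests.post now returns the fake response instead of making a request.
        requests_post_mock.return_value = MockPostResponse()
        ...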
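
For a version that runs on its own, here is a minimal sketch of the same idea using only the standard library. It patches urllib.request.urlopen rather than requests.post so that no third-party package is needed; the fetch_user_count function, the URL, and the payload shape are all hypothetical and exist only for illustration.

```python
import json
import urllib.request
from unittest.mock import MagicMock, patch


def fetch_user_count(url):
    """Hypothetical service function: call an external API, then apply some logic."""
    with urllib.request.urlopen(url) as response:
        payload = json.loads(response.read())
    # The logic under test: count only the active users.
    return sum(1 for user in payload["users"] if user["active"])


def run_with_mocked_request():
    """Exercise fetch_user_count without touching the network."""
    fake_body = json.dumps(
        {"users": [{"active": True}, {"active": False}, {"active": True}]}
    ).encode()

    # MagicMock supports the context-manager protocol, so `with urlopen(...)` works.
    fake_response = MagicMock()
    fake_response.read.return_value = fake_body
    fake_response.__enter__.return_value = fake_response

    with patch("urllib.request.urlopen", return_value=fake_response) as urlopen_mock:
        count = fetch_user_count("https://example.invalid/users")

    # The mock also records how it was called, which can be asserted on.
    urlopen_mock.assert_called_once_with("https://example.invalid/users")
    return count
```

Calling run_with_mocked_request() exercises the counting logic against the canned payload, and the production function itself needed no changes to become testable.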

The override_settings decorator is similar: on a test-by-test basis, you can override the value of a Django setting to more easily test situations that rely on specific settings. For example, the following snippet overrides the Django middleware list to include authentication middleware in the test environment.

    from django.conf import settings
    from django.test import LiveServerTestCase, override_settings

    middleware = settings.MIDDLEWARE + [
        "AuthenticationMiddleware",
    ]


    @override_settings(
        MIDDLEWARE=middleware,
    )
    class AuthenticationMiddlewareTestCase(LiveServerTestCase, BaseTestSuite):
        def test_authentication_middleware(self):
            self.client.cookies["token"] = "test-token"
            response = self.client.get("/")
            self.assertEqual(response.status_code, 200)

Mocks certainly aren’t perfect, and full end-to-end or integration tests should be preferred wherever possible, but I found it is worth striving for fuller test coverage even when something takes a bit of creative thinking to test.

Prioritize test feedback loop efficiency

Having good test coverage is only half the battle; you also need to run those tests. In my experience, the biggest barrier to running tests as often as would be most helpful is the time it takes to run them.

To address this, I wanted to make running the tests as seamless as possible. Previously, all tests were run through the CLI by providing the path to each test. I found this generated just enough friction that I skipped tests that weren’t strictly necessary, or put off running tests until the feature was finished instead of running them as early as possible. Tests provide the most value when run early and often, so although this friction was small, it had a noticeable impact on development efficiency.

In order to decrease the feedback time, I set up the PyCharm test runner to work with Django so that tests can be run via a keyboard shortcut within the editor instead of requiring interaction with the terminal. The setup is very dependent on the configuration of your application, so I won’t go into details, but I would recommend starting with the PyCharm quickstart. This allows tests to be run instantly without leaving the context of the editor. Though it saves perhaps only five seconds per run, the mental load this shortcut removes makes a significant difference to the development experience.

What Hasn’t Changed:

Continue to use Django Rest Framework Viewsets and Serializers

Despite my original hesitations, I decided to continue using Django Rest Framework and all the features it provides going into this next project. I wrote about some of those hesitations with using Django in a blog post when I first started using it. While I still agree with a lot of the points I made there, after further use of the technology I believe that the benefits outweigh the difficulties.

Django Rest Framework is a super-framework on top of Django that allows additional abstraction of the REST API by automatically creating the standard CRUD endpoints through the definition of a viewset and a serializer for a particular model. This eliminates most of the redundant boilerplate associated with defining REST resources and allows creating standard viewsets extremely quickly. The viewset system also ensures consistency between the behavior of all endpoints in the application. The HTTP methods are consistent as are the response codes and data formats. This all allows for creating new backend models very quickly and reliably, leading to an enjoyable developer experience.

Continue to use factoryboy for test development

Factoryboy (published as the factory_boy package) is a library that streamlines creating data in your test setups. It lets you create factory classes for your models that define default values, which can be overridden to suit the requirements of each test. This description sounds pedestrian, but these factories are game-changing for the ease of writing tests. As long as you define your factories correctly, the single statement UserFactory() can provide the entire setup for a user test, and specific values can be overridden to make clear what is being tested, such as UserFactory(is_active=False). I’ve found that using factoryboy makes writing tests feel easy, and I will most assuredly keep using it for many Python projects in the future.
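To show the underlying idea without depending on the library, here is a plain-Python sketch of the "defaults plus overrides" pattern. This is not factoryboy’s API: the User dataclass, the user_factory function, and the default values are all hypothetical stand-ins, and the real library offers class-based factories with far more features.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class User:
    """Hypothetical stand-in for a real model."""

    username: str
    email: str
    is_active: bool = True


# Shared counter so every generated user gets a unique username and email.
_sequence = count(1)


def user_factory(**overrides):
    """Build a User with sensible defaults; keyword arguments override them."""
    n = next(_sequence)
    defaults = {
        "username": f"user{n}",
        "email": f"user{n}@example.com",
        "is_active": True,
    }
    defaults.update(overrides)
    return User(**defaults)


# A test only spells out the values it actually cares about.
default_user = user_factory()
inactive_user = user_factory(is_active=False)
```

The benefit is the same one described above: the call site names only what matters to the test, so the intent of inactive_user is obvious at a glance.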
