In .NET 4.0 we have several new collection types that have been introduced inside of the System.Collections.Concurrent namespace. The one that we are here to talk about today is the ConcurrentQueue. The ConcurrentQueue is a lock-free thread-safe implementation of the standard queue data structure. A queue is a data structure that has FIFO (First In First Out) semantics, and it has two main operations, enque and dequeue. Enque puts a new item at the end of the queue, and dequeue takes the item off the end of the queue.

You can think about it like a line at a store:

Items go in at the end, and come out of the front. So the first person in the line is the first person out of the line. Like we said, FIFO! In order for us to interact with the ConcurrentQueue, we have two main methods. These are “Enqueue” and “TryDequeue”.

Usage

Enqueue takes a single parameter which is the item to add to the ConcurrentQueue:

var cq = new ConcurrentQueue<string>();

cq.Enqueue("test");

Now we can dequeue this same item by calling TryDequeue. TryDequeue takes a single out parameter of type T and returns a boolean which signifies if an item was dequeued or not:

string value;

if (cq.TryDequeue(out value))

{

Console.WriteLine(value);

}

We also have an additional method at our disposal that allows us to look at the item at the head of the queue without removing it. This is called “TryPeek” and works the same as “TryDequeue”:

string value;

if (cq.TryPeek(out value))

{

Console.WriteLine(value);

}

As with other concurrent collections that we have looked at, there are two properties on the ConcurrentQueue called Count and IsEmpty that allow us to check to see if there are any items in the Queue. As I stated before though, in a multi-threaded implementation the use of these properties are diminished because you really cannot rely on their values unless you are performing locking. The reason for this is that even if I check to see if the queue isn’t empty like this:

cq.Enqueue("test");

string value;

if (!cq.IsEmpty)

{

cq.TryDequeue(out value);

Console.WriteLine(value);

}

Some other thread could have come along in the meantime and take the last item out of the queue, and now my code is broken. This sort of race condition is something that you always have to be on the lookout for when writing multi-threaded applications. In this case, you are much better off just trying to dequeue an item and checking the boolean from that method to determine what you need to do.

If you have been following the previous entries in this series, you may have noticed that the ConcurrentQueue is missing a method that the other collections had. And that is the “Clear” method. The reasoning behind the lack of the a clear method on the ConcurrentQueue is that the lock-less algorithm used to implement the queue does not allow for an atomic clearing of the queue. Since they could not implement an atomic clear, they thought it was best to just leave it off rather than implement a method that might not live up to users expectations.

Also, just like previous data structures, the ConcurrentQueue also supports IEnumerable. This means that it can be iterated over using all standard mechanisms and we can run LINQ queries against it. It too is a moment-in-time snapshot of the queue when “GetEnumerator” is called. This means that anything added to the queue after “GetEnumerator” is called will not be iterated over. This is accomplished by calling “ToList” on the queue and enumerating the entire queue and returning it as a List<T>.

Internal Implementation

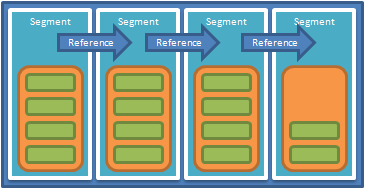

Internally the ConcurrentQueue is stored as a linked list of segments, with each segment holding an array of items, so the head and tail segments (along with number of items in each) are captured and then iterated over in order. The internal implementation of ConcurrentQueue looks something like this (note that this might change in the future):

In this way, when one segment is filled up (currently 32 is the number of items per segment) and a new item is put into the queue, a new segment is allocated and the item is placed in that segment. The index of the first item in the first segment, and the last item in the last segment are stored. By doing this, the algorithm can start at the index of the first item in the first segment, which may not be zero if items have been removed from the queue. And then iterate through each segment in the middle, and stop at the index of the last item in the final segment. Because pointers are kept to the head and tail segments, if a segment is emptied because all of its items have been dequeued, then it simply moves the head to the next segment. All in all, this is very efficient in terms of speed and in terms of garbage collector activity.

Summary

So there you have it, that is the ConcurrentQueue in a nutshell. The ConcurrentQueue is a simple (in use, not in construction!) and yet very powerful addition to your parallel programming toolset. If you need thread-safe FIFO operations, then you can throw your locks away and start using the ConcurrentQueue today. Hey, that rhymed!

Loved the article? Hated it? Didn’t even read it?

We’d love to hear from you.

This is really very nice stuff to understand ConcurrentQueue in System.Collections.Concurrent namespace.

ConcurrentQueue is cool but it has some side effects 🙁

http://connect.microsoft.com/VisualStudio/feedback/details/552868/memory-leak-in-concurrentqueue-t-class-dequeued-enteries-are-still-rooted

It’s “by design”

http://blogs.msdn.com/b/pfxteam/archive/2012/05/08/concurrentqueue-lt-t-gt-holding-on-to-a-few-dequeued-elements.aspx